Mastering Home Assistant's Recorder and History: Optimizing Data for Performance and Insight

NGC 224

DIY Smart Home Creator


Home Assistant logs every state change and event, providing a powerful data stream for automation and analysis. However, unmanaged data can lead to sluggish performance, excessive disk usage, and instability. This guide helps you master the Recorder and History components, ensuring optimal performance, efficient storage, and valuable insights from your smart home data.

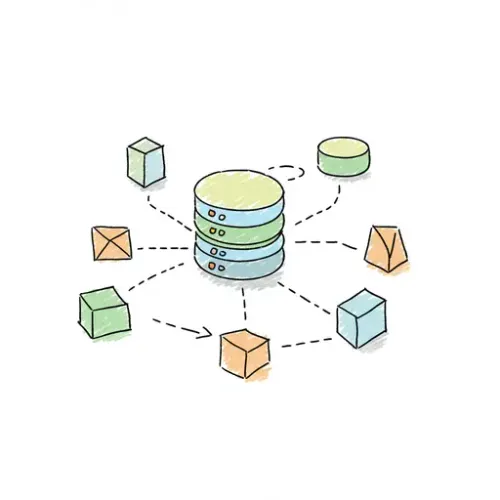

Understanding the Recorder and History

The Recorder writes state changes and events to a database, by default an SQLite file (home-assistant_v2.db) in your configuration directory. The History component reads this data to draw the historical graphs and logs in the Home Assistant frontend, so a well-tuned Recorder is key to a responsive History interface. On larger installations, SQLite itself can become a bottleneck.

Step 1: Strategic Exclusion – Reducing Data Noise

The most effective optimization is to prevent unnecessary data from being recorded. Many entities, especially those with high-frequency updates (e.g., granular power sensors, media player attributes, network stats), can rapidly inflate your database.
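To put numbers on this, you can count stored rows per entity. The sketch below uses the SQL integration's YAML configuration against the Recorder's own database. It assumes the current schema, in which entity IDs live in a `states_meta` table joined to `states` by `metadata_id` (a change introduced around Home Assistant 2023.4); the sensor name is illustrative. The query scans the whole `states` table and the integration re-runs it periodically, so treat it as a temporary diagnostic and remove it once you have found your noisy entities.

```yaml
# configuration.yaml (temporary diagnostic; the sensor name is illustrative)
sql:
  - name: "Chattiest recorded entity"
    # Without db_url, the SQL integration queries the Recorder database.
    # Assumes the newer schema where entity IDs are stored in states_meta.
    query: >
      SELECT states_meta.entity_id AS entity_id, COUNT(*) AS state_rows
      FROM states
      INNER JOIN states_meta ON states.metadata_id = states_meta.metadata_id
      GROUP BY states_meta.entity_id
      ORDER BY state_rows DESC
      LIMIT 1;
    column: "entity_id"
```

The sensor's state should be the entity ID with the most stored rows, with the row count available as an attribute.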

Identifying and Excluding Entities:

Browse the "History" dashboard (and "Developer Tools" -> "Statistics") to pinpoint chatty entities, i.e. those with dense, rapidly changing graphs. Then, configure exclusions in your configuration.yaml:

```yaml
# configuration.yaml
recorder:
  purge_keep_days: 7  # Days to retain history. Critical for database size.
  exclude:
    entities:
      - sensor.processor_use
      - sensor.uptime
    domains:
      - updater
      - sun
    entity_globs:
      - sensor.*_lqi  # Exclude all LQI sensors (ZHA naming; Zigbee2MQTT uses *_linkquality)
    event_types:
      - call_service
```

Best Practice: Exclude entities whose historical values are rarely useful or change too frequently without contributing significant long-term insight. Focus on logging only truly valuable data for your automations and analysis.
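If you would rather list what to keep than what to drop, the Recorder also accepts an `include` filter. A minimal sketch, assuming you only care about climate entities and two room sensors (the domain and entity IDs are examples): with only `include` configured, entities that match none of its rules are not recorded. When `include` and `exclude` are combined, the Recorder applies precedence rules described in its documentation, so check those before mixing the two.

```yaml
# configuration.yaml: allowlist style (domain and entity IDs are examples)
recorder:
  purge_keep_days: 7
  include:
    domains:
      - climate  # Record every climate entity
    entities:
      - sensor.temperature_living_room
      - sensor.humidity_living_room
```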

Step 2: Database Maintenance and Pruning

Even with exclusions, your database will grow. Home Assistant automatically purges old data based on purge_keep_days, but manual intervention is sometimes beneficial.
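The automatic behaviour is controlled by a few Recorder options. The sketch below simply spells out what are, to our understanding, the defaults (a nightly purge of data older than 10 days, plus a periodic repack after the purge so the file actually shrinks), so you can see which knob to turn; verify them against the Recorder documentation for your release.

```yaml
# configuration.yaml: these values restate the defaults, for illustration only
recorder:
  purge_keep_days: 10  # Default retention window in days
  auto_purge: true     # Purge data older than purge_keep_days every night
  auto_repack: true    # Periodically repack after the nightly purge to reclaim disk space
```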

Customizing Purge and Manual Purging:

The default purge_keep_days is 10; a value of 7-14 days balances insight and performance. For longer retention, consider an external database. To purge manually, go to Developer Tools -> Actions (named "Services" before Home Assistant 2024.8) and call recorder.purge:

```yaml
service: recorder.purge
data:
  repack: true  # Reclaims disk space; can be resource-intensive but effective.
```
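Adding an entity to `exclude` only stops new rows from being written; history already in the database stays until it ages out. A hedged sketch of cleaning it up right away with two Recorder actions: `recorder.purge` with `apply_filter: true`, which also removes rows matching your current exclude filter, and `recorder.purge_entities`, which deletes stored rows for the entities you target. The script name and target are examples.

```yaml
# scripts.yaml (script name and target entity are illustrative)
recorder_cleanup:
  alias: "Recorder cleanup"
  sequence:
    # Time-based purge plus removal of rows matching the recorder exclude filter
    - service: recorder.purge
      data:
        apply_filter: true
    # Remove stored rows for a specific entity (by default, all of its rows)
    - service: recorder.purge_entities
      target:
        entity_id: sensor.processor_use
```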

Monitor your database size (e.g., /config/home-assistant_v2.db). A sensor that reports the file size (for example from the File Size integration), combined with an automation, can alert you when it exceeds a threshold.
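One way to do this without extra add-ons, assuming the default SQLite database, is to let the SQL integration read SQLite's page statistics and pair the resulting sensor with an automation. The sensor name, the 2048 MiB threshold, and the notification text are arbitrary examples, and the entity ID `sensor.recorder_database_size` assumes it is derived from the sensor name. The `automation manual:` key lets these YAML automations coexist with the usual `automation: !include automations.yaml` line.

```yaml
# configuration.yaml (SQLite only; names and threshold are examples)
sql:
  - name: "Recorder database size"
    # page_count * page_size approximates the SQLite file size in bytes; convert to MiB
    query: >
      SELECT ROUND(page_count * page_size / 1024.0 / 1024.0, 1) AS size
      FROM pragma_page_count(), pragma_page_size();
    column: "size"
    unit_of_measurement: "MiB"

automation manual:
  - alias: "Warn when the recorder database grows large"
    trigger:
      - platform: numeric_state
        entity_id: sensor.recorder_database_size
        above: 2048
    action:
      - service: persistent_notification.create
        data:
          title: "Recorder database"
          message: "The database is now {{ states('sensor.recorder_database_size') }} MiB."
```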

Step 3: Elevating Performance with an External Database (MariaDB/PostgreSQL)

For larger systems or persistent SQLite performance issues, consider migrating to an external database such as MariaDB or PostgreSQL, which handle concurrent access well and are designed for large datasets.

Benefits and Setup (MariaDB Add-on Example):

- Performance: Faster queries for large datasets.

- Reliability: More resilient to corruption.

- Scalability: Better for long-term data retention.

Install the "MariaDB" add-on, set a password, and start it. Then, update your configuration.yaml:

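```yaml
# configuration.yaml
recorder:
  db_url: mysql://homeassistant:YOUR_PASSWORD@core-mariadb/homeassistant?charset=utf8mb4
  purge_keep_days: 30  # Longer retention is more practical with an external DB.
```

`core-mariadb` is the hostname of the official MariaDB add-on; if you use another add-on or a separate server, substitute its hostname. Restart Home Assistant to start logging to the new database. Existing history in home-assistant_v2.db is not migrated automatically.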
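Because `db_url` embeds a password, a common pattern is to move it into `secrets.yaml` and reference it with `!secret`. A sketch, assuming the secret name `recorder_db_url` (any name works) and the official add-on's `core-mariadb` hostname; the commented PostgreSQL line shows the URL shape for that backend, with `YOUR_PG_HOST` as a placeholder for your server.

```yaml
# configuration.yaml
recorder:
  db_url: !secret recorder_db_url
  purge_keep_days: 30

# secrets.yaml (keep exactly one recorder_db_url entry)
recorder_db_url: "mysql://homeassistant:YOUR_PASSWORD@core-mariadb/homeassistant?charset=utf8mb4"
# PostgreSQL alternative (YOUR_PG_HOST is a placeholder):
# recorder_db_url: "postgresql://homeassistant:YOUR_PASSWORD@YOUR_PG_HOST/homeassistant"
```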

Troubleshooting Common Recorder Issues

Slow History or Frontend:

Cause: Large database, too many recorded entities, slow storage. Solution: Aggressively exclude entities, reduce purge_keep_days, migrate to external DB, use fast storage (SSD).

Database Corruption:

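Cause: Power outages, improper shutdowns (more common with SQLite). Solution: For SQLite, Home Assistant usually detects a malformed database at startup, renames it with a .corrupt suffix, and starts a fresh one; if it does not, stop Home Assistant and delete or rename home-assistant_v2.db yourself. Either way, the old history is lost. For an external DB, restore from a backup. Use a UPS for critical systems.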

Best Practices for a Robust Smart Home

- Be Selective: Log only truly essential data.

- Monitor: Track disk usage and CPU load.

- Backup: Regularly back up your config and database.

- Hardware: Use fast, reliable storage (SSD).

- Review Data: Periodically check history for overly dense or uninformative graphs.

Real-World Use Cases and Advanced Tips

1. Long-Term Trends via Template Sensors:

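Aggregate high-frequency data into meaningful daily totals or peaks using template sensors. Exclude the noisy original sensor and record only the aggregated value: excluding an entity only stops the Recorder from writing it to the database, so its live state remains available to templates and automations. This significantly reduces stored data points while preserving long-term insights, especially since sensors with a state_class also get hourly long-term statistics that purge_keep_days does not remove.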

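```yaml
# configuration.yaml snippet
template:
  - trigger:
      - platform: time
        at: "23:59:59"
    sensor:
      - name: "Daily Energy Consumption"
        unit_of_measurement: "kWh"
        # One snapshot per day, which can rise or fall from day to day.
        # total_increasing would treat a smaller day as a meter reset, and the
        # energy device class expects total/total_increasing, so it is omitted.
        state_class: measurement
        state: "{{ states('sensor.main_energy_meter_today') }}"
```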
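The same trigger-based pattern covers peaks. A minimal sketch, assuming a hypothetical high-frequency source `sensor.grid_power` in watts: the sensor keeps the larger of its previous value (`this.state`) and the current reading, and restarts from the current reading at midnight. Since Home Assistant writes a new state only when the value changes, the Recorder should store a new row only when the daily peak rises.

```yaml
# configuration.yaml snippet (sensor.grid_power is a hypothetical source)
template:
  - trigger:
      - platform: state
        entity_id: sensor.grid_power
      - platform: time
        at: "00:00:00"
    sensor:
      - name: "Daily Peak Grid Power"
        unit_of_measurement: "W"
        state_class: measurement
        state: >
          {% set current = states('sensor.grid_power') | float(0) %}
          {% if trigger.platform == 'time' %}
            {# Midnight: start the new day from the current reading #}
            {{ current }}
          {% else %}
            {# Otherwise keep the highest value seen so far today #}
            {{ [current, this.state | float(0)] | max }}
          {% endif %}
```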

2. Visualizing Key Metrics:

Utilize the `history-graph` card in Lovelace for quick, responsive visualizations of your optimized entities. A lean database ensures these graphs load swiftly.

```yaml
# Example Lovelace Card
type: history-graph
entities:
  - entity: sensor.temperature_living_room
  - entity: sensor.humidity_living_room
hours_to_show: 24
```
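Once data is older than `purge_keep_days`, it only survives as long-term statistics, which exist for sensors that have a `state_class`. The `statistics-graph` card reads those statistics directly. A sketch, assuming `sensor.temperature_living_room` reports `state_class: measurement` so that mean, min, and max statistics are available:

```yaml
# Example card: long-term statistics rather than raw history
type: statistics-graph
entities:
  - sensor.temperature_living_room
days_to_show: 30
period: day  # One data point per day
stat_types:
  - mean
  - min
  - max
chart_type: line
```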

Conclusion

Mastering Home Assistant's Recorder and History is crucial for a performant, reliable, and insightful smart home. By strategically excluding data, maintaining your database, and leveraging external options for scale, you transform a potential bottleneck into a powerful data engine. This ensures your Home Assistant runs smoothly and provides the rich historical context essential for effective smart home management.
