Achieving Private Voice Control: Integrating Local Components with Home Assistant's Assist Pipeline

NGC 224

DIY Smart Home Creator

The Quest for Private Voice Control

Cloud-based voice assistants like Alexa, Google Assistant, and Siri have revolutionized how we interact with technology. However, their reliance on transmitting voice data to remote servers raises significant privacy concerns for many users. Furthermore, dependence on external servers introduces latency and potential points of failure if the internet connection drops. For smart home enthusiasts who prioritize privacy and local control, finding an alternative is crucial.

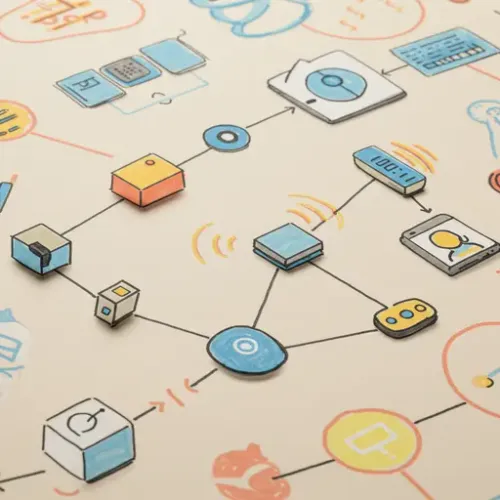

Home Assistant, known for its strong focus on local control and open standards, offers a powerful solution: the Assist pipeline. Introduced to provide a standardized way to handle voice (and text) commands, the Assist pipeline allows users to plug in different components for wake word detection, speech-to-text (STT), intent recognition, and text-to-speech (TTS). This architecture enables building a fully local voice assistant using open-source technologies.

This article will guide you through setting up a local voice control system in Home Assistant, focusing on using popular, open-source components like OpenWakeWord for wake word detection, Piper for text-to-speech, and leveraging the Wyoming protocol for communication between these components and Home Assistant.

Understanding the Home Assistant Assist Pipeline

Before diving into the setup, let's understand the pipeline's flow:

- Audio Input: Your microphone captures audio.

- Wake Word Detection: A component listens for a specific phrase (e.g., "Hey Home Assistant"). If detected, it triggers the next step.

- Speech-to-Text (STT): Another component converts the subsequent speech into text.

- Intent Recognition: Home Assistant's intent engine analyzes the text to understand the user's command (e.g., "Turn on the living room lights").

- Action Execution: Home Assistant performs the requested action based on the recognized intent.

- Text-to-Speech (TTS): Home Assistant generates an audio response (e.g., "Okay, turning on the living room lights").

- Audio Output: The response is played through a speaker.

The beauty of the Assist pipeline is that you can choose different integrations (usually provided as Home Assistant Add-ons or custom components) for each step, creating a personalized and local pipeline.
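To make the "swappable stages" idea concrete, here is a minimal Python sketch of the flow above. It is not Home Assistant code: the `Pipeline` class, the stub functions, and the use of plain strings in place of audio are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional

WAKE_PHRASE = "hey home assistant"  # illustrative wake phrase


@dataclass
class Pipeline:
    """Each field is one stage; any callable with the same shape can be swapped in."""
    wake_word: Callable[[str], bool]
    stt: Callable[[str], str]
    intent: Callable[[str], str]
    tts: Callable[[str], str]

    def run(self, audio: str) -> Optional[str]:
        if not self.wake_word(audio):
            return None              # no wake word: the pipeline stays idle
        text = self.stt(audio)       # speech-to-text
        reply = self.intent(text)    # intent recognition and action
        return self.tts(reply)       # text-to-speech


# Stub stages: strings stand in for real audio buffers.
def stub_wake(audio: str) -> bool:
    return audio.lower().startswith(WAKE_PHRASE)


def stub_stt(audio: str) -> str:
    return audio[len(WAKE_PHRASE):].strip(" ,")


def stub_intent(text: str) -> str:
    if text.lower() == "turn on the living room lights":
        return "Okay, turning on the living room lights"
    return "Sorry, I didn't understand that"


def stub_tts(reply: str) -> str:
    return f"<audio: {reply}>"


pipeline = Pipeline(stub_wake, stub_stt, stub_intent, stub_tts)
```

Replacing `stub_tts` with another callable changes only that stage, which mirrors how you pick a different engine per stage in the real pipeline settings.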

Prerequisites: Hardware and Home Assistant Setup

To get started, you'll need:

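- Home Assistant Installation: Home Assistant OS, Supervised, or Container/Core. Add-ons are available only on OS and Supervised installations; on Container or Core you need to run the equivalent services yourself (for example, as separate containers).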

- Microphone: A USB microphone connected directly to your Home Assistant machine, or a networked audio device (like an ESPHome-based audio node).

- Speaker: A speaker connected similarly, or a media player integrated into Home Assistant (though low-latency responses are best with local speakers).

- Sufficient Resources: Running STT and TTS models locally requires CPU power. A Raspberry Pi 4 or more powerful hardware is recommended.

Setup Steps: Building Your Local Pipeline

We'll use a common setup involving Add-ons for simplicity on Home Assistant OS/Supervised.

Step 1: Install the Assist Pipeline Integration

There is nothing to install here: the Assist pipeline is built into Home Assistant. You'll configure it in Step 4.

Step 2: Install Required Add-ons (Examples)

Go to Settings -> Add-ons -> Add-on Store. Search for and install the following:

- OpenWakeWord: For local wake word detection.

- Piper: For high-quality, local text-to-speech.

- Wyoming Protocol: This isn't a single add-on but a protocol used by many audio-related add-ons (like OpenWakeWord and Piper). Ensure the add-ons you install support Wyoming.

Install each add-on, start it, and check its logs to confirm it is running without errors. Note the port each one listens on (usually the default, but check the add-on's documentation).
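If you want a quick check from another machine that the services are reachable, a small TCP probe is enough. The sketch below uses only the Python standard library. The port numbers are the defaults mentioned in this article, and the host you pass in is whatever address your setup exposes. On OS/Supervised, an add-on's port may need to be exposed in its network settings before it is reachable from outside.

```python
import socket

# Default ports as mentioned in this article; adjust if you changed them.
SERVICES = {"openwakeword": 10400, "piper": 10200}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def check_services(host: str, services: dict = SERVICES) -> dict:
    """Probe each named service and report whether its port accepts connections."""
    return {name: is_port_open(host, port) for name, port in services.items()}
```

Calling `check_services("homeassistant.local")` (the hostname is a placeholder) returns a dictionary mapping each service name to `True` or `False`. An open port only shows that something is listening, not that it is the right service.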

Step 3: Configure Assist Pipeline Integrations

Go to Settings -> Devices & Services -> Add Integration.

- Search for and add the OpenWakeWord integration (Wyoming-based add-ons typically show up under the Wyoming Protocol integration). It should auto-discover the running add-on or ask for the host and port (usually `a0d7b954-openwakeword` and the default port 10400 if using the official add-on on OS/Supervised; confirm the hostname on the add-on's Info page). Configure your desired wake word(s).

- Search for and add the Piper integration. As with OpenWakeWord, it should find the add-on or ask for the host and port (usually `a0d7b954-piper` and port 10200; again, confirm the hostname on the add-on's Info page). Choose your preferred voice.

- You'll also need an STT engine and an intent recognition engine. Home Assistant includes built-in options. For local STT, you might explore integrations like Vosk (often also available via Wyoming). For intent recognition, the built-in Assist integration is commonly used, powered by the Home Assistant conversation agent.
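For a feel of what travels over those ports: as described in the Wyoming project's documentation, each event begins with a single-line JSON header terminated by a newline, carrying a `type` field and optional `data`. A `describe` event asks a service to report what it offers. The helper names below are hypothetical, and the sketch handles only the header line (not any additional data or binary payload), so treat it as an illustration to check against the protocol description rather than a client implementation.

```python
import json
from typing import Optional


def encode_event(event_type: str, data: Optional[dict] = None) -> bytes:
    """Build the newline-terminated JSON header line for an event."""
    header = {"type": event_type}
    if data:
        header["data"] = data
    return (json.dumps(header) + "\n").encode("utf-8")


def decode_header(line: bytes) -> dict:
    """Parse one header line back into a dictionary."""
    return json.loads(line.decode("utf-8"))


# The bytes a client would send to ask a service what it offers.
DESCRIBE = encode_event("describe")
```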

Step 4: Configure the Assist Pipeline

Go to Settings -> Voice Assistants -> Pipelines.

- Create a new pipeline or edit the default one.

- Give it a name (e.g., "My Local Pipeline").

- Select your installed components for each stage:

- Wake word: Choose your OpenWakeWord integration.

- STT: Choose your local STT integration (e.g., Vosk).

- Intent: Choose the "Home Assistant" integration.

- TTS: Choose your Piper integration.

- Configure the language for each component (must match).

- Save the pipeline.

- Set your new local pipeline as the default or test it via the "Try out pipeline" button.
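You can also exercise the intent stage from a script, without a microphone, by posting text to Home Assistant's conversation endpoint (`/api/conversation/process` in the REST API). The sketch below only builds the request. The base URL and the long-lived access token are placeholders you would supply, and the function name is made up for this example.

```python
import json
import urllib.request


def build_conversation_request(base_url: str, token: str, text: str,
                               language: str = "en") -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a text command."""
    body = json.dumps({"text": text, "language": language}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/conversation/process",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",  # long-lived access token
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, pass the result to `urllib.request.urlopen(...)` and read the JSON response. This bypasses wake word detection, STT, and TTS, so it tells you whether a sentence is understood, not whether the audio path works.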

Step 5: Configure Audio Hardware (Microphone/Speaker)

This is often the trickiest part. Home Assistant needs access to your audio devices.

- USB Microphone/Speaker: If connected directly to the Home Assistant machine, you might need an add-on like the Audio add-on (for OS/Supervised) to expose the devices over the Wyoming protocol. Configure the Audio add-on to use your specific USB audio device(s).

- ESPHome Audio Devices: Create ESPHome nodes with microphones (like the ESP32-S3 Box or custom setups) and speakers. Configure the ESPHome firmware to integrate with the Home Assistant Assist pipeline using the `voice_assistant` component. This is often the most robust distributed solution.

Once your audio hardware is integrated (either via the Audio add-on or ESPHome), go back to Settings -> Voice Assistants -> Assist settings and ensure your audio device is selected under the "Assist microphone" setting for devices running the Home Assistant Companion app or specific hardware.

Device Integration and Automation

With the pipeline configured and audio working, you can now issue commands:

- Using the Home Assistant Companion app's Assist button.

- Using dedicated hardware integrated via ESPHome.

The commands you can give depend on Home Assistant's intent recognition. By default, it understands common phrases related to devices exposed to the "Assist" integration (lights, switches, covers, media players, etc.). You can view and extend these commands:

- Go to Settings -> Voice Assistants -> Assist settings.

- Explore the "Sentences" tab to see recognized phrases and add your own custom sentences and intents. This allows you to create complex automations triggered by specific voice commands.

For example, you could add a sentence like "Start movie mode" and map it to a custom intent that triggers an automation turning off lights, closing blinds, and turning on the TV.
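Conceptually, a custom sentence is a template that maps spoken variations onto one intent name. The toy matcher below illustrates that idea in Python. It is not Home Assistant's matching engine: it supports only `(a|b)` alternatives, and the intent names `StartMovieMode` and `StopMovieMode` are hypothetical.

```python
import re
from typing import Optional

# Hypothetical sentence templates mapped to hypothetical intent names.
SENTENCES = {
    "(start|begin) movie mode": "StartMovieMode",
    "(stop|end) movie mode": "StopMovieMode",
}


def compile_template(template: str) -> re.Pattern:
    """Turn a template with (a|b) alternatives into a case-insensitive regex."""
    parts = []
    for ch in template:
        if ch == "(":
            parts.append("(?:")        # non-capturing group for alternatives
        elif ch in ")|":
            parts.append(ch)
        elif ch == " ":
            parts.append(r"\s+")       # tolerate repeated whitespace
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts), re.IGNORECASE)


def match_intent(text: str) -> Optional[str]:
    """Return the intent whose template matches the whole sentence, if any."""
    cleaned = text.strip().rstrip(".!?")
    for template, intent in SENTENCES.items():
        if compile_template(template).fullmatch(cleaned):
            return intent
    return None
```

With these templates, "Start movie mode" and "begin movie mode" resolve to the same intent, which is what lets a single automation respond to several phrasings.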

Tips for a Reliable Smart Home Voice Assistant

- Microphone Quality and Placement: Invest in decent microphones and place them strategically to minimize background noise and capture speech clearly.

- System Resources: Monitor your Home Assistant CPU usage. STT processing, especially, can be resource-intensive. If performance is poor, consider upgrading hardware or distributing the load (e.g., using ESPHome for audio input/output).

- Tuning Wake Word: If using OpenWakeWord or similar, adjust sensitivity settings if you experience too many false positives or missed detections.

- Refine Sentences: The default sentences are a starting point. Regularly check the Assist history (under Settings -> Voice Assistants) to see how your commands are interpreted and refine your custom sentences for better recognition.

- Network Stability: If using networked audio devices (like ESPHome), ensure your Wi-Fi or Ethernet connection is stable for low-latency voice processing.

- Backups: As always, back up your Home Assistant configuration regularly.
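The wake word tuning tip above comes down to a trade-off that a few lines of Python can show. Assume the detector emits a score between 0 and 1 for each audio frame. A detection fires once the score has stayed at or above a threshold for a required number of consecutive frames. The parameter names `threshold` and `trigger_level` are illustrative, and your add-on's actual options and behavior may differ.

```python
from typing import Optional, Sequence


def detect_wake_word(scores: Sequence[float], threshold: float = 0.5,
                     trigger_level: int = 1) -> Optional[int]:
    """Return the index of the frame where detection fires, or None."""
    consecutive = 0
    for index, score in enumerate(scores):
        if score >= threshold:
            consecutive += 1
            if consecutive >= trigger_level:
                return index
        else:
            consecutive = 0  # a low-scoring frame resets the streak
    return None


frames = [0.1, 0.6, 0.2, 0.7, 0.8]
first_hit = detect_wake_word(frames, threshold=0.5, trigger_level=1)
stricter = detect_wake_word(frames, threshold=0.5, trigger_level=2)
```

Raising either value reduces false positives at the cost of more missed detections. Here the single spike at index 1 triggers the lenient setting, while the stricter one waits for two high frames in a row.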

Conclusion

Setting up local voice control in Home Assistant requires a bit more effort than plugging in a cloud-based smart speaker, but the benefits in terms of privacy, speed, and customization are significant. By leveraging the Assist pipeline and open-source components via the Wyoming protocol, you can build a robust, reliable, and entirely local voice assistant that keeps your data within your home network. Experiment with different components, refine your sentences, and enjoy the power of truly private smart home control.
