Unlock Deeper Insights: Centralized Logging and Analysis with Home Assistant and the ELK Stack

NGC 224

DIY Smart Home Creator

Your Home Assistant instance generates a wealth of information, recording every event, state change, automation trigger, and crucially, any errors or warnings that occur. While the built-in log viewer is useful for quick checks, it becomes challenging to manage, search, and analyze logs effectively as your smart home grows in complexity.

Imagine trying to pinpoint why a specific automation failed last night, correlate errors across multiple integrations, or understand patterns of device communication issues. Manually sifting through massive log files is time-consuming and inefficient. This is where centralized logging and analysis tools shine.

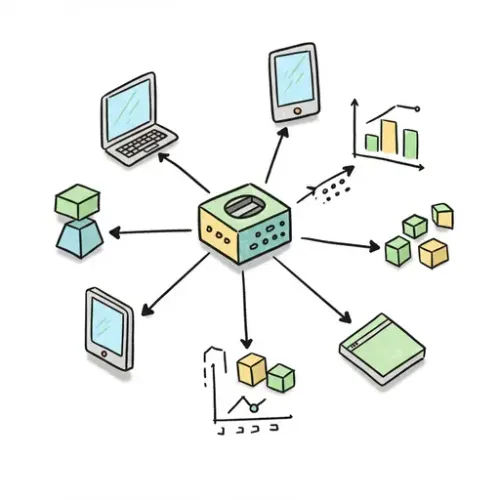

The ELK stack (Elasticsearch, Logstash/Filebeat, and Kibana) is a popular open-source solution for collecting, indexing, and visualizing log data. By piping your Home Assistant logs into an ELK stack, you unlock powerful capabilities like free-text search, historical analysis, trend identification, and sophisticated dashboarding.

Why Centralized Logging for Home Assistant?

- Enhanced Searchability: Quickly find specific events, errors, or messages across vast amounts of log data using powerful query syntax.

- Correlation: Easily see related log entries from different components or devices around the same time.

- Historical Analysis: Look back at past events to identify recurring issues or understand system behavior over time.

- Visualization: Create dashboards to monitor error rates, see which integrations are reporting issues most frequently, or track the volume of different log levels (info, warning, error).

- Proactive Monitoring: Set up alerts based on specific log patterns (e.g., an influx of error messages from a critical integration).

- Simplified Debugging: Get a comprehensive view of system activity leading up to an issue, making troubleshooting much faster.

Introducing the ELK Stack Components

- Elasticsearch: A highly scalable search and analytics engine. It stores and indexes your logs, making them quickly searchable.

- Filebeat (or Logstash): A lightweight data shipper. Filebeat reads log files from your Home Assistant system and forwards them to Elasticsearch. Logstash is a more powerful processing pipeline that can transform, filter, and route logs, but Filebeat is often sufficient and simpler for this use case. We'll focus on Filebeat here.

- Kibana: A data visualization and exploration tool. It provides a web interface to query your Elasticsearch data, create visualizations, and build dashboards.

Setting Up the ELK Stack (Simplified via Docker Compose)

The easiest way to get started with the ELK stack for a Home Assistant setup is often using Docker Compose. This requires Docker to be installed on your server (which could be the same machine running Home Assistant if it's not HA OS/Supervised, or a separate machine like a NAS or mini-PC).

Create a docker-compose.yml file:

version: '3.8' # Compose file format version (not the Elastic Stack version)

services:
  elasticsearch:
    image: elasticsearch:7.8.0
    container_name: elasticsearch
    environment:
      - discovery.type=single-node
      - xpack.security.enabled=false # Simple setup, disable security for testing/internal network
      - http.cors.enabled=true
      - http.cors.allow-origin="*"
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m" # Adjust memory as needed
    ports:
      - "9200:9200"
    volumes:
      - elasticsearch_data:/usr/share/elasticsearch/data # Persist data
    restart: unless-stopped

  kibana:
    image: kibana:7.8.0
    container_name: kibana
    environment:
      - ELASTICSEARCH_HOSTS=http://elasticsearch:9200
    ports:
      - "5601:5601"
    depends_on:
      - elasticsearch
    restart: unless-stopped

volumes:
  elasticsearch_data: # Define the volume

Note: This Docker Compose setup disables security (xpack.security.enabled=false) for simplicity during initial setup and assumes your network is secure. For production or internet-facing setups, you MUST configure security properly.

Save the file and run docker-compose up -d in the same directory.

Wait a few minutes for the containers to start. You should be able to access Kibana at http://your_server_ip:5601.
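If you would rather script the "is it up yet?" check than keep refreshing the browser, here is a minimal Python sketch. It assumes Elasticsearch is reachable at http://localhost:9200 with security disabled, as in the Compose file above. The function names are made up for illustration. A single-node cluster often reports "yellow" because replica shards have nowhere to go, so the sketch treats yellow as usable.

```python
import json
import urllib.request


def cluster_is_usable(health: dict) -> bool:
    """Return True when the cluster health status is green or yellow."""
    return health.get("status") in ("green", "yellow")


def fetch_cluster_health(base_url: str, timeout: float = 5.0) -> dict:
    """Call the _cluster/health endpoint and decode the JSON response."""
    url = f"{base_url}/_cluster/health"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


# Example call (assumed address, adjust to your server):
#   health = fetch_cluster_health("http://localhost:9200")
#   print(health.get("status"), cluster_is_usable(health))
```

If the call raises a connection error, the container is most likely still starting; give it another minute and try again.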

Getting Home Assistant Logs into ELK using Filebeat

Now, we need to get Home Assistant's logs. The method depends on your Home Assistant installation type:

- Home Assistant OS/Supervised: Accessing the host filesystem where the logs are stored (typically /var/log/supervisor or similar) might require advanced configuration or running Filebeat on the host.

- Home Assistant Container/Core (venv): The log file (home-assistant.log) is usually located within the configuration directory. If you run HA in Docker, you can mount this log file as a volume to your Filebeat container, or run Filebeat on the host if the config directory is mounted there.

For simplicity and common setups, let's assume you can access the home-assistant.log file from the host machine or a network location accessible by a separate Filebeat container.

Setting up Filebeat

Run Filebeat on the machine where your Home Assistant logs are accessible. This could be the same server as ELK, or a separate machine.

Download and install Filebeat (follow the official Elastic documentation for your OS).

Configure Filebeat by editing the filebeat.yml file. Here's a basic configuration:

filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /path/to/your/home-assistant.log # <-- IMPORTANT: Replace with the actual path!
    fields:
      log_topic: homeassistant # Add a field to identify the source

#-------------------------- Elasticsearch Output --------------------------
output.elasticsearch:
  hosts: ["your_elk_server_ip:9200"] # <-- IMPORTANT: Replace with your Elasticsearch IP and port
  # username: "elastic" # Uncomment and set if you enabled security
  # password: "changeme" # Uncomment and set if you enabled security

#============================== Filebeat modules ===============================
# We are not using modules here, just tailing a custom log file.
# You can disable or remove the modules section if desired.

#============================== Logging ===============================
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644

Explanation:

- filebeat.inputs: Defines where Filebeat should look for logs. We use the log input type.

- paths: Specify the absolute path to your home-assistant.log file. This is crucial and will vary based on your installation.

- fields: Add a custom field (log_topic: homeassistant) to make it easy to identify HA logs in Kibana. Filebeat nests custom fields under a fields prefix by default, so it shows up in Kibana as fields.log_topic.

- output.elasticsearch: Configures Filebeat to send logs to your Elasticsearch instance. Replace your_elk_server_ip with the actual IP or hostname.

Save the filebeat.yml file.

Start Filebeat (the command depends on your OS and installation method, often something like sudo service filebeat start or sudo systemctl start filebeat or running the binary directly). Check Filebeat logs for errors.
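Beyond reading Filebeat's own log, a quick way to confirm that events are actually arriving is to ask Elasticsearch how many documents carry the custom field. The Python sketch below is only an illustration: it assumes the unsecured setup from this guide, the default filebeat-* index naming, and the default placement of custom fields under fields. The helper names are hypothetical.

```python
import json
import urllib.request


def build_count_request(base_url: str, topic: str) -> urllib.request.Request:
    """Build a POST request for the _count API, matching on fields.log_topic."""
    body = json.dumps({"query": {"match": {"fields.log_topic": topic}}})
    return urllib.request.Request(
        f"{base_url}/filebeat-*/_count",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def count_documents(base_url: str, topic: str, timeout: float = 5.0) -> int:
    """Send the count request and return the number of matching documents."""
    request = build_count_request(base_url, topic)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["count"]


# Example call (assumed address, adjust to your server):
#   print(count_documents("http://your_elk_server_ip:9200", "homeassistant"))
```

A count that stays at zero after Home Assistant has written new log lines usually points to a wrong path in filebeat.yml or a file permission problem.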

Exploring Home Assistant Logs in Kibana

Once Filebeat is running and sending data, go back to your Kibana interface (http://your_server_ip:5601).

- Create an Index Pattern: In Kibana, navigate to 'Management' -> 'Stack Management' -> 'Kibana' -> 'Index Patterns'. Click 'Create index pattern'. Filebeat 7.x typically sends data to indices named like filebeat-<version>-YYYY.MM.DD. Type filebeat-* into the 'Index pattern name' field and click 'Next step'. For the 'Time field', select '@timestamp'. Click 'Create index pattern'.

- Discover Logs: Go to the 'Analytics' -> 'Discover' tab. You should now see your Home Assistant log entries appearing. You can filter by your custom field to see only HA logs. Because Filebeat nests custom fields under fields by default, search for fields.log_topic: homeassistant.

- Search and Filter: Use the search bar at the top to query your logs. Examples:

  - message: "timeout": Find logs containing the word "timeout".

  - message: "ERROR": Find error messages. With this basic setup the whole log line lands in the message field, so the level is searched as text.

  - level: ERROR: Find all error messages by level. This only works once the log line has been parsed into separate fields (see the note on entity_id below).

  - level: WARNING and fields.log_topic: homeassistant: Find warnings specifically from Home Assistant (again assuming a parsed level field).

  - entity_id: sensor.my_problem_sensor: If your logs include entity IDs, you could search by them. (This requires more advanced parsing which is beyond this basic setup, but possible with Logstash or Ingest Pipelines.)

- Visualize: Navigate to 'Analytics' -> 'Visualize'. You can create various visualizations like bar charts showing log levels over time, pie charts breaking down logs by component, etc.

- Dashboard: Go to 'Analytics' -> 'Dashboard' to combine multiple visualizations and searches into a single view for quick monitoring.
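To make the "more advanced parsing" mentioned above less abstract, here is a small Python sketch of what splitting a Home Assistant log line into fields looks like. It assumes the usual default layout of timestamp, level, thread, and logger name followed by the message. The sample line in the comment is invented for illustration. In a real pipeline the same idea would live in a Logstash filter or an Elasticsearch ingest pipeline rather than in Python.

```python
import re
from typing import Optional

# Assumed layout: "YYYY-MM-DD HH:MM:SS.mmm LEVEL (Thread) [logger.name] message"
LINE_RE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}(?:\.\d+)?)\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"\((?P<thread>[^)]*)\)\s+"
    r"\[(?P<logger>[^\]]+)\]\s+"
    r"(?P<message>.*)$"
)


def parse_line(line: str) -> Optional[dict]:
    """Split one log line into named fields, or return None if it does not match."""
    match = LINE_RE.match(line.rstrip("\n"))
    return match.groupdict() if match else None


# Example (made-up log line):
#   parse_line("2024-05-01 21:14:03.512 ERROR (MainThread) "
#              "[homeassistant.components.mqtt] Timeout waiting for broker")
```

Lines that do not match, such as the continuation lines of a Python traceback, return None here; a real pipeline would need multiline handling to attach them to the preceding entry.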

Device Integration and Best Practices

Centralized logging enhances your ability to manage and debug devices and integrations:

- Debugging Flaky Devices: If a device occasionally drops offline or behaves erratically, check the logs in ELK around the time of the issue. Search for the device's name, entity ID, or the name of the integration managing it. Look for patterns of errors or warnings that might indicate communication problems (timeouts, connection refused, malformed data).

- Identifying Integration Issues: Filter logs by specific integration names to see if one particular integration is generating excessive errors or warnings. This helps identify misconfigured or unstable integrations.

- Monitoring Automation Failures: While not all automation failures generate errors, specific steps or conditions that fail might log relevant messages. Searching for keywords related to your automation or the entities involved can provide clues.

- Resource Monitoring Clues: High error rates or specific warning messages (e.g., related to database performance or network issues) visible in logs can sometimes correlate with system slowdowns or unreliability, guiding you towards performance bottlenecks.

- Alerting: Once comfortable with searching, explore Kibana's alerting features (these are part of X-Pack, which must be enabled; the basic features are included in the free version) or use external tools to monitor Elasticsearch indices for specific patterns (e.g., trigger an alert if more than 10 ERROR level logs occur in 5 minutes from a critical component).

- Log Volume Management: ELK can consume significant disk space. Configure Elasticsearch index lifecycle management policies to automatically delete older indices after a set period (e.g., 30 or 60 days). Also, consider adjusting Home Assistant's logging level in its configuration.yaml if certain components are too verbose (e.g., set noisy components to warning or error).

- Security: If accessing your ELK stack over a network, especially outside your local network, configure security (users, roles, TLS/SSL) in Elasticsearch and Kibana. The simple setup above is NOT secure for untrusted networks.
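The alerting rule mentioned in the list above ("more than 10 ERROR level logs in 5 minutes") boils down to a sliding-window count. Here is a minimal Python sketch of that logic, assuming you already have the timestamps of ERROR entries (for example from an Elasticsearch query). The function name and defaults are illustrative and not part of any Elastic tool.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Iterable, Optional


def first_alert_time(
    error_times: Iterable[datetime],
    threshold: int = 10,
    window: timedelta = timedelta(minutes=5),
) -> Optional[datetime]:
    """Return the first timestamp at which more than `threshold` errors
    fall inside a single `window`, or None if that never happens."""
    recent = deque()
    for ts in sorted(error_times):
        recent.append(ts)
        # Drop errors that are older than the window relative to this one.
        while ts - recent[0] > window:
            recent.popleft()
        if len(recent) > threshold:
            return ts
    return None
```

An external script could run this against recent ERROR timestamps on a schedule and send a notification whenever it returns a value.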

Conclusion

Integrating Home Assistant logs with a centralized logging solution like the ELK stack transforms your ability to monitor and debug your smart home. While the initial setup might require some technical effort, the ability to quickly search, analyze, and visualize your system's activity will save you countless hours of frustration when troubleshooting issues and provide invaluable insights into the reliability of your ecosystem. Start by getting your logs flowing, explore the data in Kibana, and gradually build dashboards and searches tailored to your specific needs. Your future self trying to debug a mysterious issue will thank you.
